Getting started with NLG features

Enabling prompts

To enable prompt engineering, open the Feature flags widget and check the Enable prompt management option.

Adding an LLM integration

To run any of our NLG features, your namespace needs an integration with one of the supported generative model providers:

- OpenAI integration

- Vertex integration

- Co:here integration

- Custom integrations are also supported

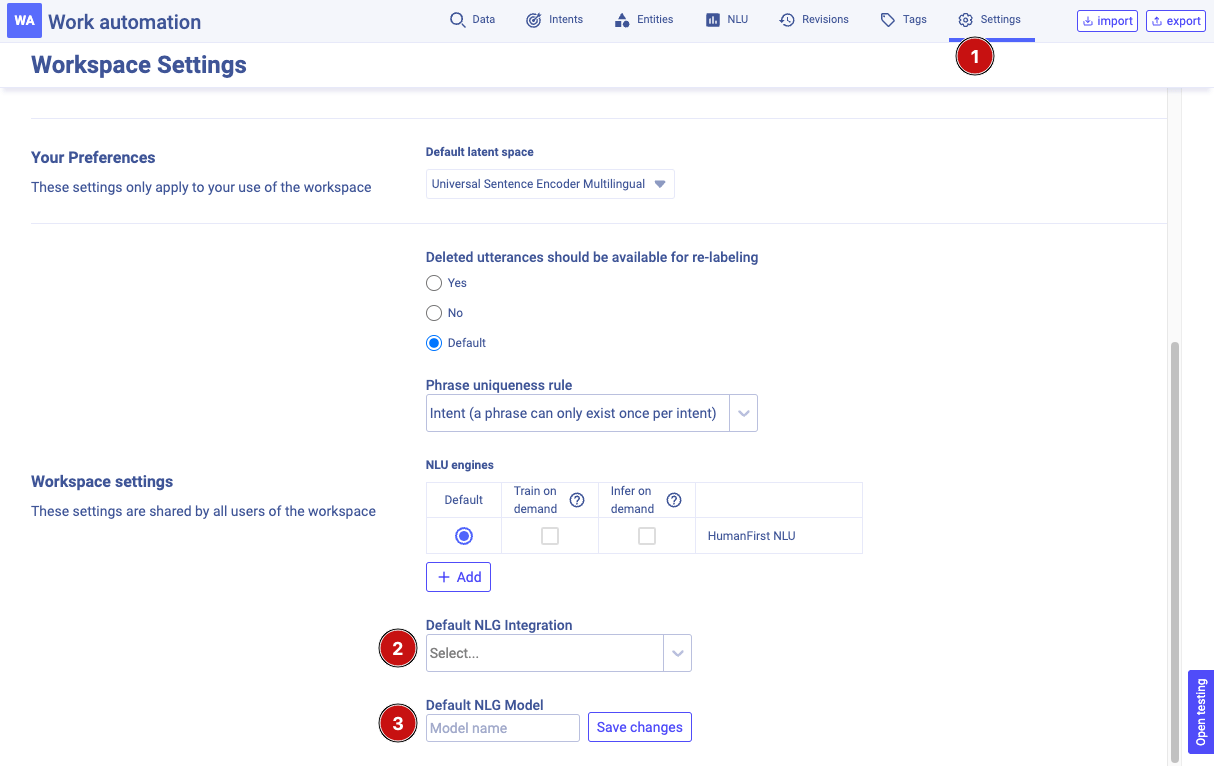

Specifying the workspace default NLG model

Once you have added the required integrations to your namespace, specify which integration your workspace should use for NLG features:

- Open the workspace settings.

- Select the integration to use.

- (Optional) Specify which generative model to use.

Troubleshooting

If your workspace is not properly configured for NLG features, you will likely see one of the following two errors:

No generative integration found

You have not configured an integration that provides generative models. See Adding an LLM integration, and the integrations documentation for more details.

No default generative integration set

You have not specified the default NLG model for the workspace. See Specifying the workspace default NLG model.
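The two errors map onto the two setup steps, which can be pictured as a short diagnostic. This is a minimal sketch only: `WorkspaceNLGConfig` and `diagnose` are hypothetical stand-ins for the namespace and workspace settings described above, not a real API of the product. Checking for an integration before checking for a workspace default is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Optional


# Hypothetical record of the settings described on this page (not a real API).
@dataclass
class WorkspaceNLGConfig:
    # Generative integrations added to the namespace, e.g. ["openai"].
    namespace_integrations: list[str] = field(default_factory=list)
    # Integration selected in the workspace settings.
    default_integration: Optional[str] = None
    # Optional generative model chosen in the workspace settings.
    default_model: Optional[str] = None


def diagnose(config: WorkspaceNLGConfig) -> Optional[str]:
    """Return the troubleshooting error this configuration maps to, or None if it looks complete."""
    # Step 1: the namespace must have at least one generative integration.
    if not config.namespace_integrations:
        return "No generative integration found"
    # Step 2: the workspace must name a default integration for NLG features.
    if config.default_integration is None:
        return "No default generative integration set"
    return None


print(diagnose(WorkspaceNLGConfig()))  # No generative integration found
print(diagnose(WorkspaceNLGConfig(namespace_integrations=["openai"])))  # No default generative integration set
print(diagnose(WorkspaceNLGConfig(["openai"], default_integration="openai")))  # None
```

The optional model is not checked, since the steps above mark it as optional.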