Prompt content

Note: Beta feature

HumanFirst prompt engineering features are currently available on demand only.

Prompts let you interact with generative models using natural language to execute a variety of operations on your data.

Writing prompts#

Prompts are instructions written using natural language:

Example of a basic prompt

Prompts + data#

Prompts are most useful when combined with your data. Start by stashing some data, then write a prompt that uses the HumanFirst template placeholders to inject the selected data where you need it.

The example prompt below will summarize all the conversations within the stash:

Example of prompt + data

Template language#

HumanFirst provides basic templating features so that prompts can make use of your data. Placeholders inject data at specific points in your prompt (see the example above, which uses the conversation placeholder).

All template placeholders must be enclosed in a pair of double curly braces {{ }}.

- {{ text }} uses the text of the provided utterance(s).
- {{ conversation }} uses the conversation from which the provided utterance(s) originated.
- {{ sourceConversation }} uses the original conversation from which a generated example originated.
- {{ meta "<key>" }} will inject the metadata value for "key" from the provided utterance.
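To make the placeholder behaviour concrete, here is a minimal Python sketch of how such a template could be expanded for a single utterance. It is not HumanFirst's implementation: the `Utterance` class, its fields, and the `render` function are hypothetical names chosen for illustration, and the product may treat whitespace, missing metadata keys, or unknown placeholders differently.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Utterance:
    """Hypothetical stand-in for a stashed utterance (not a HumanFirst type)."""
    text: str
    conversation: str = ""
    source_conversation: str = ""
    metadata: dict = field(default_factory=dict)


# Matches {{ name }} or {{ meta "key" }}, tolerating spaces inside the braces.
PLACEHOLDER = re.compile(r'\{\{\s*(\w+)(?:\s+"([^"]*)")?\s*\}\}')


def render(template: str, utterance: Utterance) -> str:
    """Replace each placeholder with the matching value from the utterance."""

    def substitute(match: re.Match) -> str:
        name, key = match.group(1), match.group(2)
        if name == "text":
            return utterance.text
        if name == "conversation":
            return utterance.conversation
        if name == "sourceConversation":
            return utterance.source_conversation
        if name == "meta" and key is not None:
            # Assumption: a missing metadata key renders as an empty string.
            return str(utterance.metadata.get(key, ""))
        # Unknown placeholders are left untouched in this sketch.
        return match.group(0)

    return PLACEHOLDER.sub(substitute, template)


utterance = Utterance(
    text="Where is my order?",
    conversation="Customer: Where is my order?\nAgent: Let me check.",
    metadata={"channel": "chat"},
)
prompt = render(
    'Summarize this {{ meta "channel" }} conversation:\n{{ conversation }}',
    utterance,
)
```

In this sketch, `prompt` ends up containing the metadata value and the full conversation in place of the two placeholders.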

Merging the stash#

When a prompt is run against stashed data (using the template language above), it is run by default once per stashed utterance, in isolation from the others.

It is also possible to run the prompt against a Merged stash, which will take all the utterances within the stash and inject them into the prompt as one large piece of content.
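The difference between the two modes can be sketched in a few lines of Python. This is an illustration only: `build_prompts` is a hypothetical helper, it handles just the {{ text }} placeholder, and joining merged utterances with newlines is an assumption, since the separator HumanFirst actually uses is not stated here.

```python
from typing import List


def build_prompts(template: str, utterances: List[str], merge: bool = False) -> List[str]:
    """Return the prompt(s) that would be sent to the model.

    Assumptions: only the {{ text }} placeholder is handled, and merged
    utterances are joined with newlines.
    """
    placeholder = "{{ text }}"
    if merge:
        # Merged stash: every utterance is injected into a single prompt.
        return [template.replace(placeholder, "\n".join(utterances))]
    # Default: one prompt per utterance, each run in isolation.
    return [template.replace(placeholder, u) for u in utterances]


stash = ["Call about shipping status", "Needed shipping status"]
isolated = build_prompts("Standardize: {{ text }}", stash)
merged = build_prompts("Standardize: {{ text }}", stash, merge=True)
```

Here `isolated` holds two prompts, one per utterance, while `merged` holds a single prompt containing both utterances.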

Example use case for merge stash#

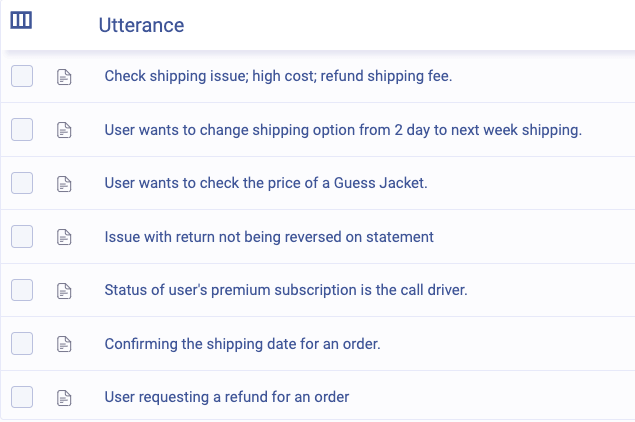

Cases that require standardized output are a good fit for a merged stash run. For example, a first prompt could be used to extract the call driver from each conversation. This would yield a variety of short answers explaining why a customer called customer support. However, there is no guarantee that the generative model will phrase similar call drivers in the same way. For example, "Call about shipping status" and "Needed shipping status" are both ways a generative model might respond to a call in which a user was inquiring about their shipment status.

A second prompt could then be run on all the output of the first prompt to standardize the extracted call drivers. The standardized format makes it quick to identify similar use cases.
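The two-step workflow described above could be sketched as follows. Everything here is an assumption made for illustration: `generate` is a hypothetical callable standing in for the generative model, the prompt wording is invented rather than taken from the prompts used in this example, and the function only mirrors the isolated-then-merged order of the two runs.

```python
from typing import Callable, List


def extract_then_standardize(
    conversations: List[str],
    generate: Callable[[str], str],
) -> str:
    """Run an extraction prompt per conversation, then one merged standardization prompt.

    `generate` is a hypothetical callable standing in for the generative model.
    """
    # Illustrative prompt wording, not the prompts used in the example below.
    extract_template = "What is the call driver of this conversation?\n{conversation}"
    standardize_template = (
        "Group the call drivers below into a short list of standardized tags:\n{drivers}"
    )

    # Step 1: isolated run, one model call per conversation.
    drivers = [generate(extract_template.format(conversation=c)) for c in conversations]

    # Step 2: merged run, a single model call over all step 1 outputs.
    return generate(standardize_template.format(drivers="\n".join(drivers)))
```

With N conversations, this sketch makes N model calls in the first step and exactly one in the second, which is what gives the second prompt a view of every extracted call driver at once.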

The first prompt was used to extract call drivers from each of the 60 selected utterances:


The second prompt was run against all 60 responses from the previous prompt, with the merge stash feature turned on, to standardize the 60 responses into 12 tags:
